3. Installing OSM

3.1. Pre-requirements

To install OSM, you will need at least a single server or VM that meets the following requirements:

MINIMUM: 2 CPUs, 6 GB RAM, 40 GB disk and a single interface with Internet access

RECOMMENDED: 2 CPUs, 8 GB RAM, 40 GB disk and a single interface with Internet access
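The host can be checked against these figures before running the installer. The following is a minimal sketch, not part of the OSM installer: the function names meets_requirements and host_resources are hypothetical, and it assumes a Linux host with nproc, /proc/meminfo and GNU df. Values are rounded to whole GiB, so filesystem overhead can make a 40 GB disk report slightly less than 40.

```shell
# Hypothetical pre-flight helpers; they are not part of install_osm.sh.

# meets_requirements CPUS RAM_GB DISK_GB MIN_CPUS MIN_RAM_GB MIN_DISK_GB
# Succeeds only if every measured value is at least its threshold.
meets_requirements() {
  local cpus=$1 ram=$2 disk=$3 min_cpus=$4 min_ram=$5 min_disk=$6
  (( cpus >= min_cpus && ram >= min_ram && disk >= min_disk ))
}

# host_resources: print "CPUS RAM_GB DISK_GB" for this host. RAM comes from
# /proc/meminfo and disk is the size of the / filesystem from GNU df; both
# are rounded to the nearest GiB.
host_resources() {
  local cpus ram_kb disk_kb
  cpus=$(nproc)
  ram_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
  disk_kb=$(df --output=size -k / | tail -n 1 | tr -d ' ')
  echo "$cpus $(( (ram_kb + 524288) / 1048576 )) $(( (disk_kb + 524288) / 1048576 ))"
}
```

For example, meets_requirements $(host_resources) 2 6 40 checks the MINIMUM profile, and meets_requirements $(host_resources) 2 8 40 checks the RECOMMENDED one.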

Base image:

Ubuntu 20.04 cloud image (64-bit variant required) (https://cloud-images.ubuntu.com/focal/current/focal-server-cloudimg-amd64.img)

Ubuntu 20.04 server image (64-bit variant required) (http://releases.ubuntu.com/20.04/)
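A similar check for the base image can read /etc/os-release and the machine architecture. This is a sketch with a hypothetical function name, not an official check; it assumes that "64-bit" means x86_64 (amd64), which matches the amd64 cloud image linked above.

```shell
# is_supported_base [OS_RELEASE_FILE] [ARCH]
# Succeeds for Ubuntu 20.04 on x86_64 (amd64), fails otherwise.
is_supported_base() {
  local file=${1:-/etc/os-release} arch=${2:-$(uname -m)}
  local id version_id
  # source the os-release file in a subshell so its variables do not leak
  id=$(. "$file" && echo "$ID")
  version_id=$(. "$file" && echo "$VERSION_ID")
  [[ $id == ubuntu && $version_id == 20.04 && $arch == x86_64 ]]
}
```

Run without arguments, it inspects the current host: is_supported_base && echo "supported base image".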

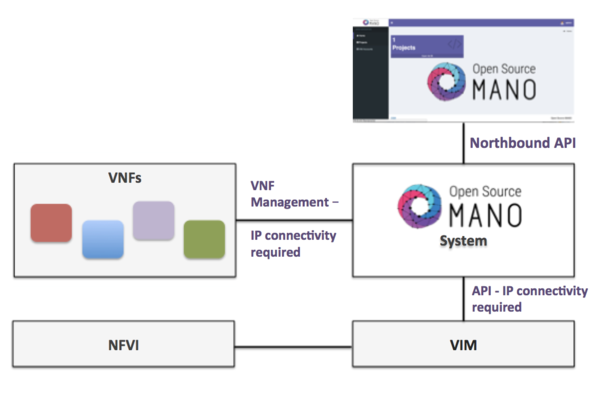

In addition, you will need a Virtual Infrastructure Manager (VIM) available so that OSM can orchestrate workloads on it. The following figure illustrates OSM's interaction with VIMs and the VNFs to be deployed there:

OSM communicates with the VIM for the deployment of VNFs.

OSM communicates with the VNFs deployed in a VIM to run day-0, day-1 and day-2 configuration primitives.

Hence, it is assumed that:

Each VIM has an API endpoint reachable from OSM.

Each VIM has a so-called management network, which provides IP addresses to VNFs.

That management network is reachable from OSM.
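The first assumption can be checked from the OSM host before the VIM is registered. This minimal sketch uses hypothetical helper names, bash's /dev/tcp redirection and coreutils timeout. It only tests that a TCP connection to the endpoint opens, not that the API or the credentials work, and it does not handle IPv6 literals or user information in URLs.

```shell
# url_host_port URL: print "HOST PORT" for an http(s) URL, defaulting the
# port to 443 for https and 80 otherwise.
url_host_port() {
  local url=$1 scheme rest hostport host port
  scheme=${url%%://*}
  rest=${url#*://}
  hostport=${rest%%/*}
  host=${hostport%%:*}
  if [[ $hostport == *:* ]]; then
    port=${hostport##*:}
  elif [[ $scheme == https ]]; then
    port=443
  else
    port=80
  fi
  echo "$host $port"
}

# tcp_reachable HOST PORT [TIMEOUT_SECONDS]: succeed if a TCP connection opens.
tcp_reachable() {
  timeout "${3:-5}" bash -c ": > /dev/tcp/$1/$2" 2>/dev/null
}
```

For instance, with a hypothetical Keystone URL: tcp_reachable $(url_host_port https://keystone.example.com:5000/v3) && echo reachable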

3.2. Installation procedure

Once you have a host with the characteristics above, you can trigger the OSM installation as follows:

wget https://osm-download.etsi.org/ftp/osm-13.0-thirteen/install_osm.sh

chmod +x install_osm.sh

./install_osm.sh

This will install a standalone Kubernetes on a single host, and OSM on top of it.

TIP: To make later troubleshooting easier, it is recommended to save the full log of your installation process:

wget https://osm-download.etsi.org/ftp/osm-13.0-thirteen/install_osm.sh

chmod +x install_osm.sh

./install_osm.sh 2>&1 | tee osm_install_log.txt

You will be asked whether you want to proceed with installing and configuring LXD, Juju and Docker CE, and initializing a local Kubernetes cluster, as prerequisites. Please answer y.
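In the logging command of the TIP, the exit status of the pipeline is that of tee, so a script calling it cannot tell whether the installation failed. A minimal sketch of a wrapper (the name run_logged is hypothetical) that still copies all output to a log file but returns the installer's own exit status, using bash's PIPESTATUS array:

```shell
# run_logged LOGFILE COMMAND [ARGS...]
# Runs COMMAND, copying stdout and stderr to the terminal and to LOGFILE,
# and returns COMMAND's exit status instead of tee's.
run_logged() {
  local log=$1
  shift
  "$@" 2>&1 | tee "$log"
  return "${PIPESTATUS[0]}"
}
```

For example: run_logged osm_install_log.txt ./install_osm.sh || echo "installation failed, see osm_install_log.txt". The interactive prompt still works, because only stdout and stderr go through tee.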

Optionally, you can use the option --k8s_monitor to install an add-on to monitor the K8s cluster and OSM running on top of it.

./install_osm.sh --k8s_monitor

3.2.1. Other installer options

You can include optional components in your installation by adding the following flags:

Kubernetes Monitor:

--k8s_monitor: install an add-on to monitor the Kubernetes cluster and OSM running on top of it, through Prometheus and Grafana

PLA:

--pla: install the PLA module for placement support

Example:

./install_osm.sh --k8s_monitor --pla

The OSM installer supports many more installation options. The general usage is the following:

./install_osm.sh [OPTIONS]

With no options, it will install OSM from binaries.

Options:

-y: do not prompt for confirmation, assumes yes

-r <repo>: use specified repository name for osm packages

-R <release>: use specified release for osm binaries (deb packages, lxd images, ...)

-u <repo base>: use specified repository url for osm packages

-k <repo key>: use specified repository public key url

--k8s_monitor: install the OSM kubernetes monitoring with prometheus and grafana

-m <MODULE>: install OSM but only rebuild the specified docker images (NG-UI, NBI, LCM, RO, MON, POL, KAFKA, MONGO, PROMETHEUS, PROMETHEUS-CADVISOR, KEYSTONE-DB, NONE)

-o <ADDON>: do not install OSM, but ONLY one of the addons (vimemu, elk_stack) (assumes OSM is already installed)

--showopts: print chosen options and exit (only for debugging)

--uninstall: uninstall OSM: remove the containers and delete NAT rules

-D <devops path>: use local devops installation path

-h / --help: prints help
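Options can be combined on one command line. As a sketch, the hypothetical function below derives the flags from hypothetical environment variables (OSM_ASSUME_YES, OSM_K8S_MONITOR, OSM_PLA), so that the same selection can first be previewed with --showopts and then used for the real run.

```shell
# build_install_opts: print installer flags selected through the
# (hypothetical) variables OSM_ASSUME_YES, OSM_K8S_MONITOR and OSM_PLA.
build_install_opts() {
  local opts=()
  [[ ${OSM_ASSUME_YES:-0} == 1 ]] && opts+=(-y)
  [[ ${OSM_K8S_MONITOR:-0} == 1 ]] && opts+=(--k8s_monitor)
  [[ ${OSM_PLA:-0} == 1 ]] && opts+=(--pla)
  printf '%s\n' "${opts[*]}"
}
```

The variables must be set before the command line is expanded, for example: export OSM_K8S_MONITOR=1 OSM_PLA=1, then ./install_osm.sh $(build_install_opts) --showopts to check the options, and finally the same command without --showopts.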

3.3. Other installation methods

3.3.1. Remote installation to an OpenStack infrastructure

OSM can also be installed on a remote OpenStack infrastructure using the standard OSM installer. This method is based on Ansible: it configures the OpenStack infrastructure and then deploys a VM with OSM. The Ansible playbook performs the following steps:

Creation of a new VM flavour (4 CPUs, 8 GB RAM, 40 GB disk)

Download of the Ubuntu 20.04 image and upload to OpenStack Glance

Generation of a new SSH private and public key pair

Setup of a new security group to allow external SSH and HTTP access

Deployment of a clean Ubuntu 20.04 VM and installation of OSM to it

Important note: The OpenStack user needs Admin rights or similar to perform those operations.

The installation can be performed with the following command:

wget https://osm-download.etsi.org/ftp/osm-13.0-thirteen/install_osm.sh

chmod +x install_osm.sh

./install_osm.sh -O <openrc file/cloud name> -N <OpenStack public network name/ID> [--volume] [OSM installer options]

The options -O and -N are mandatory. The -O option accepts either a path to an OpenStack openrc file or a cloud name. If a cloud name is used, the clouds.yaml file should be located under ~/.config/openstack/ or /etc/openstack/. More information about the clouds.yaml file can be found here

The -N option requires the name or ID of an external network. This is the OpenStack network to which the OSM VM will be attached.

The --volume option instructs OpenStack to create an external volume attached to the VM instead of using a local one. This may be suitable for production environments. It requires OpenStack Cinder to be configured on the OpenStack infrastructure.

Only some OSM installer options are supported in this mode, in particular: -r, -k, -u, -R and -t. Other options will be supported in the future.
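Because -O accepts either an openrc file or a cloud name, a wrapper script may want to tell the two apart before calling the installer. The hypothetical function below follows the rule described above: a readable file is treated as an openrc file; otherwise a clouds.yaml must exist in one of the two locations. It is only a sketch and does not verify that the cloud name is actually defined in that file.

```shell
# openstack_target_kind ARG: print "openrc" if ARG is a readable file,
# "cloud" if a clouds.yaml exists in ~/.config/openstack/ or /etc/openstack/,
# and fail otherwise.
openstack_target_kind() {
  local arg=$1 f
  if [[ -f $arg && -r $arg ]]; then
    echo openrc
    return 0
  fi
  for f in "$HOME/.config/openstack/clouds.yaml" /etc/openstack/clouds.yaml; do
    if [[ -r $f ]]; then
      echo cloud
      return 0
    fi
  done
  echo "no openrc file '$arg' and no clouds.yaml found" >&2
  return 1
}
```

For example, with the placeholder names mycloud and public-net: openstack_target_kind mycloud && ./install_osm.sh -O mycloud -N public-net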

3.3.2. Charmed Installation

Some cases where the Charmed installer might be more suitable:

Production ready: HA, backups, upgrades…

Lifecycle management: Configuration of OSM Charms, scaling…

Integration with other components: Via relations.

Pluggable: Do you want to use an existing Kubernetes? Or an existing Juju controller? Or an existing LXD cluster? You can.

3.3.2.1. Standalone

wget https://osm-download.etsi.org/ftp/osm-13.0-thirteen/install_osm.sh

chmod +x install_osm.sh

./install_osm.sh --charmed

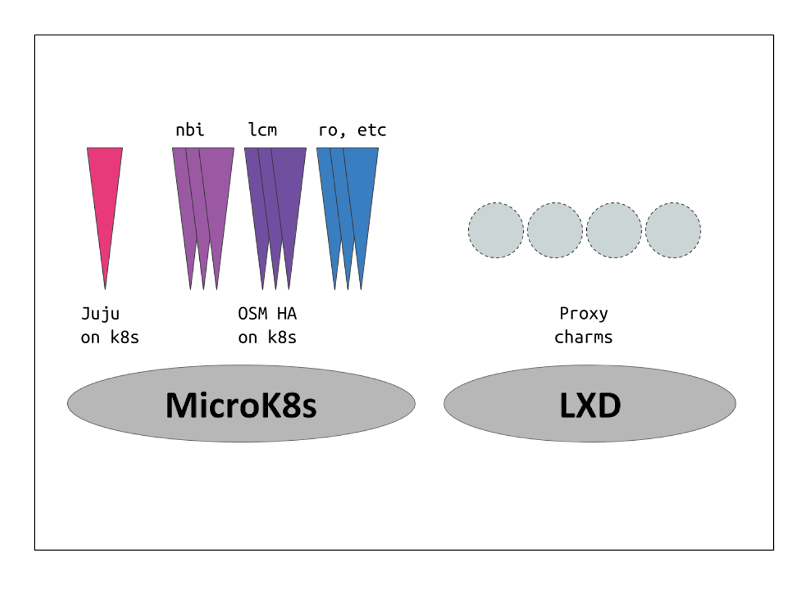

This will install OSM on microk8s using Charms.

3.3.2.2. External

To install using external components, the following parameters can be added:

wget https://osm-download.etsi.org/ftp/osm-13.0-thirteen/install_osm.sh

chmod +x install_osm.sh

./install_osm.sh --charmed --k8s ~/.kube/config --vca <name> --lxd <lxd-cloud.yaml> --lxd-cred <lxd-credentials.yaml>

The values for the parameters are the following:

--k8s: the path to the kubeconfig file of your external Kubernetes.

--vca: the name of the controller already added to your Juju CLI.

--lxd: the path to the cloud.yaml file of your external LXD cluster.

--lxd-cred: the path to the credential.yaml file of your external LXD cluster.

For more information on the LXD cloud.yaml and credential.yaml files, see here
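Since the installer receives several file paths, a quick pre-flight check can catch typos before the installation starts. A minimal sketch with a hypothetical function name; it only checks that the files exist and are readable, not that their contents are valid.

```shell
# check_charmed_external FILE...
# Reports every file (kubeconfig, LXD cloud.yaml, LXD credential file) that
# is missing or unreadable, and fails if there is at least one.
check_charmed_external() {
  local f rc=0
  for f in "$@"; do
    if [[ ! -r $f ]]; then
      echo "missing or unreadable: $f" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

For example: check_charmed_external ~/.kube/config lxd-cloud.yaml lxd-credentials.yaml && ./install_osm.sh --charmed --k8s ~/.kube/config --vca <name> --lxd lxd-cloud.yaml --lxd-cred lxd-credentials.yaml. Running juju show-controller <name> beforehand confirms that the controller passed to --vca is known to your Juju CLI.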

3.3.2.3. OSM client in Charmed installations

Once the installation is over, follow these instructions to use the osmclient:

NBI_IP=$(juju status --format yaml | yq r - applications.nbi-k8s.address)

export OSM_HOSTNAME=$NBI_IP

To make this setting permanent for the OSM client, add it to your .bashrc:

NBI_IP=$(juju status --format yaml | yq r - applications.nbi-k8s.address)

echo "export OSM_HOSTNAME=$NBI_IP" >> ~/.bashrc
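Appending with >> adds a new line every time the command is run, so after a reinstallation the .bashrc may contain several OSM_HOSTNAME exports. A sketch of an idempotent alternative (the function name is hypothetical) that replaces a previous export of the same variable instead of adding another one:

```shell
# set_bashrc_export NAME VALUE [FILE]
# Writes "export NAME=VALUE" to FILE (default ~/.bashrc), dropping any
# earlier "export NAME=..." line so the variable is defined only once.
set_bashrc_export() {
  local name=$1 value=$2 file=${3:-$HOME/.bashrc} tmp
  touch "$file" || return
  tmp=$(mktemp) || return
  # keep every line except a previous export of the same variable
  grep -v "^export ${name}=" "$file" > "$tmp" || true
  printf 'export %s=%q\n' "$name" "$value" >> "$tmp"
  # overwrite in place so the file keeps its permissions
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

For example: set_bashrc_export OSM_HOSTNAME "$NBI_IP"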

3.3.2.4. Scaling OSM Components

3.3.2.4.1. Scaling OSM Charms

Scaling (replicating) the containers of each OSM component can help both to distribute the workload (for some components) and to provide high availability if one of the replicas fails.

For high availability, the Charms automatically apply anti-affinity rules to distribute the component pods across different Kubernetes worker nodes. Therefore, real high availability requires a Kubernetes cluster with multiple worker nodes.

To scale a charm, run the following command:

juju scale-application lcm-k8s 3 # 3 is the number of replicas

If the application already has the number of replicas given to scale-application, nothing changes. If the number is lower than the current one, the application scales down.
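When several components need the same number of replicas, the command can be run in a loop. In this sketch the function name and the JUJU variable are hypothetical (set JUJU=echo to print the commands instead of running them), and the application names must match those shown by juju status.

```shell
# scale_charms COUNT APP...: run "juju scale-application APP COUNT" for each
# application, stopping at the first failure.
scale_charms() {
  local count=$1 app
  shift
  for app in "$@"; do
    "${JUJU:-juju}" scale-application "$app" "$count" || return
  done
}
```

For example: scale_charms 3 lcm-k8s nbi-k8s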

3.3.2.4.2. Scaling OSM VCA

For more detailed information about setting up a highly available controller, please consult the official Juju documentation.

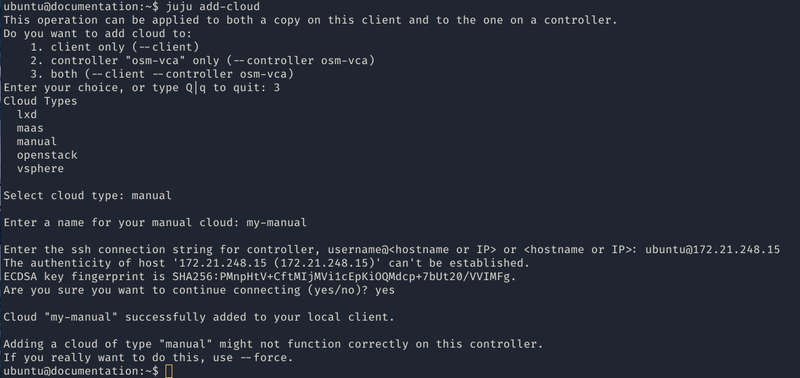

Nevertheless, the following shows one way of setting up a manual HA Juju controller that will act as the VCA.

First, you will need 3 machines running the latest Ubuntu LTS, each with at least 4 GB of RAM. The machine from which the controller is created needs SSH access to these 3 machines.

Then add the manual cloud by running the following command and following the steps shown in the screenshot:

juju add-cloud

Once the add-cloud command has finished, run the following commands to create the controller, add the remaining machines and enable HA:

juju bootstrap my-manual manual-controller

juju switch controller

juju add-machine ssh:ubuntu@<ip-second-machine>

juju add-machine ssh:ubuntu@<ip-third-machine>

juju enable-ha --to 1,2

Once juju status shows all machines in the “started” state, the HA controller is initialized.
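The controller steps above can be collected into one function. This is a sketch under the same assumptions as the commands shown: the manual cloud has already been added, the user on the machines is ubuntu, and the two added machines receive IDs 1 and 2 in the controller model (machine 0 being the bootstrap machine). The function name and the JUJU override for dry runs are hypothetical.

```shell
# setup_manual_ha_controller CLOUD CONTROLLER IP2 IP3
# Bootstraps CONTROLLER on the manual CLOUD, switches to the controller
# model, adds the two other machines over SSH and enables HA on them.
setup_manual_ha_controller() {
  local cloud=$1 controller=$2 ip2=$3 ip3=$4
  local juju=${JUJU:-juju}
  "$juju" bootstrap "$cloud" "$controller" &&
    "$juju" switch controller &&
    "$juju" add-machine "ssh:ubuntu@$ip2" &&
    "$juju" add-machine "ssh:ubuntu@$ip3" &&
    "$juju" enable-ha --to 1,2
}
```

For example: setup_manual_ha_controller my-manual manual-controller <ip-second-machine> <ip-third-machine>, followed by juju status until all machines are started.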

To install Charmed OSM with the HA controller, pass it with the --vca argument:

./install_osm.sh --charmed --vca manual-controller

3.3.2.5. Using external DBs

Charmed OSM supports using external DBs. To do so, first remove the relations to the internal DBs and the DB applications themselves:

juju remove-relation nbi mongodb-k8s

juju remove-relation lcm mongodb-k8s

juju remove-relation ro mongodb-k8s

juju remove-relation mon mongodb-k8s

juju remove-relation pol mariadb-k8s

juju remove-relation pol mongodb-k8s

juju remove-relation pla mongodb-k8s

juju remove-relation keystone mariadb-k8s

juju remove-application mongodb-k8s

juju remove-application mariadb-k8s
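The removal steps above can be scripted in the same order. A sketch with a hypothetical function name and a hypothetical JUJU override (set JUJU=echo for a dry run). Because the function stops at the first failing command, drop the pla entry from the list if your deployment has no pla application.

```shell
# remove_db_relations: remove the DB relations listed above, then the
# mongodb-k8s and mariadb-k8s applications, stopping at the first failure.
remove_db_relations() {
  local juju=${JUJU:-juju} pair
  local relations=(
    "nbi mongodb-k8s" "lcm mongodb-k8s" "ro mongodb-k8s" "mon mongodb-k8s"
    "pol mariadb-k8s" "pol mongodb-k8s" "pla mongodb-k8s" "keystone mariadb-k8s"
  )
  for pair in "${relations[@]}"; do
    # $pair is deliberately unquoted so it splits into the two application names
    "$juju" remove-relation $pair || return
  done
  "$juju" remove-application mongodb-k8s &&
    "$juju" remove-application mariadb-k8s
}
```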

Now, add the configuration to access the external DBs:

juju config nbi mongodb_uri="<MongoDB URI>"

juju config lcm mongodb_uri="<MongoDB URI>"

juju config ro mongodb_uri="<MongoDB URI>"

juju config mon mongodb_uri="<MongoDB URI>"

juju config pol mysql_uri="<MySQL URI>"

juju config pol mongodb_uri="<MongoDB URI>"

juju config pla mongodb_uri="<MongoDB URI>"

juju config keystone mysql_host="<MySQL Host>"

juju config keystone mysql_port="<MySQL Port>"

juju config keystone mysql_root_password="<MySQL Root Password>"
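The same configuration can be applied from environment variables, so that each URI is typed only once. The variable names MONGODB_URI, MYSQL_URI, MYSQL_HOST, MYSQL_PORT and MYSQL_ROOT_PASSWORD, the function name and the JUJU override are hypothetical. As with the original commands, the root password is passed to juju as a command-line argument, so it is visible in the process list while juju runs.

```shell
# configure_external_dbs: apply the juju config commands above using the
# (hypothetical) variables below; fails early if any of them is empty.
configure_external_dbs() {
  local juju=${JUJU:-juju} v app
  for v in MONGODB_URI MYSQL_URI MYSQL_HOST MYSQL_PORT MYSQL_ROOT_PASSWORD; do
    if [[ -z ${!v:-} ]]; then
      echo "$v is not set" >&2
      return 1
    fi
  done
  for app in nbi lcm ro mon pol pla; do
    "$juju" config "$app" mongodb_uri="$MONGODB_URI" || return
  done
  "$juju" config pol mysql_uri="$MYSQL_URI" &&
    "$juju" config keystone mysql_host="$MYSQL_HOST" &&
    "$juju" config keystone mysql_port="$MYSQL_PORT" &&
    "$juju" config keystone mysql_root_password="$MYSQL_ROOT_PASSWORD"
}
```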

3.3.3. How to upgrade components from daily images

Upgrading a single OSM component without upgrading the others accordingly may lead to inconsistencies. Unless you are really sure of what you are doing, use this procedure with caution.

One of the most common reasons for this type of upgrade is using your own cloned repo of a module for development purposes.

3.3.3.1. Upgrading RO in K8s

The following commands upgrade the RO module (deployment ro):

git clone https://osm.etsi.org/gerrit/osm/RO

#you can then work in the cloned repo, apply patches with git pull, etc.

docker build RO -f RO/Dockerfile.local -t opensourcemano/ro:develop --no-cache

kubectl -n osm patch deployment ro --patch '{"spec": {"template": {"spec": {"containers": [{"name": "ro", "image": "opensourcemano/ro:develop"}]}}}}'

kubectl -n osm scale deployment ro --replicas=0

kubectl -n osm scale deployment ro --replicas=1

# In order to make this change persistent after reboots,

# you will have to update the file /etc/osm/docker/osm_pods/ro.yaml to reflect the change

# in the docker image, for instance:

# sudo sed -i "s/opensourcemano\/ro:.*/opensourcemano\/ro:develop/g" /etc/osm/docker/osm_pods/ro.yaml

# kubectl -n osm apply -f /etc/osm/docker/osm_pods/ro.yaml

3.3.3.2. Upgrading LCM in K8s

git clone https://osm.etsi.org/gerrit/osm/LCM

#you can then work in the cloned repo, apply patches with git pull, etc.

docker build LCM -f LCM/Dockerfile.local -t opensourcemano/lcm:develop --no-cache

kubectl -n osm patch deployment lcm --patch '{"spec": {"template": {"spec": {"containers": [{"name": "lcm", "image": "opensourcemano/lcm:develop"}]}}}}'

kubectl -n osm scale deployment lcm --replicas=0

kubectl -n osm scale deployment lcm --replicas=1

# In order to make this change persistent after reboots,

# you will have to update the file /etc/osm/docker/osm_pods/lcm.yaml to reflect the change

# in the docker image, for instance:

# sudo sed -i "s/opensourcemano\/lcm:.*/opensourcemano\/lcm:develop/g" /etc/osm/docker/osm_pods/lcm.yaml

# kubectl -n osm apply -f /etc/osm/docker/osm_pods/lcm.yaml

3.3.3.3. Upgrading MON in K8s

git clone https://osm.etsi.org/gerrit/osm/MON

#you can then work in the cloned repo, apply patches with git pull, etc.

docker build MON -f MON/docker/Dockerfile -t opensourcemano/mon:develop --no-cache

kubectl -n osm patch deployment mon --patch '{"spec": {"template": {"spec": {"containers": [{"name": "mon", "image": "opensourcemano/mon:develop"}]}}}}'

kubectl -n osm scale deployment mon --replicas=0

kubectl -n osm scale deployment mon --replicas=1

# In order to make this change persistent after reboots,

# you will have to update the file /etc/osm/docker/osm_pods/mon.yaml to reflect the change

# in the docker image, for instance:

# sudo sed -i "s/opensourcemano\/mon:.*/opensourcemano\/mon:develop/g" /etc/osm/docker/osm_pods/mon.yaml

# kubectl -n osm apply -f /etc/osm/docker/osm_pods/mon.yaml

3.3.3.4. Upgrading POL in K8s

git clone https://osm.etsi.org/gerrit/osm/POL

#you can then work in the cloned repo, apply patches with git pull, etc.

docker build POL -f POL/docker/Dockerfile -t opensourcemano/pol:develop --no-cache

kubectl -n osm patch deployment pol --patch '{"spec": {"template": {"spec": {"containers": [{"name": "pol", "image": "opensourcemano/pol:develop"}]}}}}'

kubectl -n osm scale deployment pol --replicas=0

kubectl -n osm scale deployment pol --replicas=1

# In order to make this change persistent after reboots,

# you will have to update the file /etc/osm/docker/osm_pods/pol.yaml to reflect the change

# in the docker image, for instance:

# sudo sed -i "s/opensourcemano\/pol:.*/opensourcemano\/pol:develop/g" /etc/osm/docker/osm_pods/pol.yaml

# kubectl -n osm apply -f /etc/osm/docker/osm_pods/pol.yaml

3.3.3.5. Upgrading NBI in K8s

git clone https://osm.etsi.org/gerrit/osm/NBI

#you can then work in the cloned repo, apply patches with git pull, etc.

docker build NBI -f NBI/Dockerfile.local -t opensourcemano/nbi:develop --no-cache

kubectl -n osm patch deployment nbi --patch '{"spec": {"template": {"spec": {"containers": [{"name": "nbi", "image": "opensourcemano/nbi:develop"}]}}}}'

kubectl -n osm scale deployment nbi --replicas=0

kubectl -n osm scale deployment nbi --replicas=1

# In order to make this change persistent after reboots,

# you will have to update the file /etc/osm/docker/osm_pods/nbi.yaml to reflect the change

# in the docker image, for instance:

# sudo sed -i "s/opensourcemano\/nbi:.*/opensourcemano\/nbi:develop/g" /etc/osm/docker/osm_pods/nbi.yaml

# kubectl -n osm apply -f /etc/osm/docker/osm_pods/nbi.yaml

3.3.3.6. Upgrading Next Generation UI in K8s

git clone https://osm.etsi.org/gerrit/osm/NG-UI

#you can then work in the cloned repo, apply patches with git pull, etc.

docker build NG-UI -f NG-UI/docker/Dockerfile -t opensourcemano/ng-ui:develop --no-cache

kubectl -n osm patch deployment ng-ui --patch '{"spec": {"template": {"spec": {"containers": [{"name": "ng-ui", "image": "opensourcemano/ng-ui:develop"}]}}}}'

kubectl -n osm scale deployment ng-ui --replicas=0

kubectl -n osm scale deployment ng-ui --replicas=1

# In order to make this change persistent after reboots,

# you will have to update the file /etc/osm/docker/osm_pods/ng-ui.yaml to reflect the change

# in the docker image, for instance:

# sudo sed -i "s/opensourcemano\/ng-ui:.*/opensourcemano\/ng-ui:develop/g" /etc/osm/docker/osm_pods/ng-ui.yaml

# kubectl -n osm apply -f /etc/osm/docker/osm_pods/ng-ui.yaml
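The six procedures above differ only in the repository name, the Dockerfile path and the deployment name. A sketch of a generic helper (hypothetical name) that encodes those values and runs the same build, patch and restart steps. It assumes the repository has already been cloned into the current directory; DOCKER and KUBECTL are hypothetical overrides that can be set to echo for a dry run.

```shell
# upgrade_osm_module MODULE [TAG]
# Builds opensourcemano/MODULE:TAG (default "develop") and points the MODULE
# deployment in the osm namespace at it, then restarts it (scale 0, then 1).
upgrade_osm_module() {
  local module=$1 tag=${2:-develop} repo dockerfile image
  case $module in
    ro)    repo=RO;    dockerfile=RO/Dockerfile.local ;;
    lcm)   repo=LCM;   dockerfile=LCM/Dockerfile.local ;;
    nbi)   repo=NBI;   dockerfile=NBI/Dockerfile.local ;;
    mon)   repo=MON;   dockerfile=MON/docker/Dockerfile ;;
    pol)   repo=POL;   dockerfile=POL/docker/Dockerfile ;;
    ng-ui) repo=NG-UI; dockerfile=NG-UI/docker/Dockerfile ;;
    *) echo "unknown module: $module" >&2; return 1 ;;
  esac
  image=opensourcemano/$module:$tag
  local docker=${DOCKER:-docker} kubectl=${KUBECTL:-kubectl}
  # container name and deployment name are both the module name, as above
  local patch='{"spec":{"template":{"spec":{"containers":[{"name":"'"$module"'","image":"'"$image"'"}]}}}}'
  "$docker" build "$repo" -f "$dockerfile" -t "$image" --no-cache &&
    "$kubectl" patch deployment "$module" -n osm --patch "$patch" &&
    "$kubectl" scale deployment "$module" -n osm --replicas=0 &&
    "$kubectl" scale deployment "$module" -n osm --replicas=1
}
```

For example: upgrade_osm_module lcm. The namespace flag is placed after the subcommand, which kubectl accepts, so that a dry run with echo prints -n instead of interpreting it as an echo option. Making the change persistent (editing /etc/osm/docker/osm_pods/<module>.yaml) is still a separate, manual step.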

3.4. Checking your installation

After some time, you will have a fresh OSM installation running its latest pre-built Docker images, which are built daily. You can access the UI at the following URL (user: admin, password: admin): http://1.2.3.4, replacing 1.2.3.4 with the IP address of your host.

As a result of the installation, different K8s objects (deployments, statefulsets, etc.) are created in the host. You can check their status by running the following command:

kubectl get all -n osm
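Right after the installer finishes, some pods may still be starting. A sketch (hypothetical function name and KUBECTL override for dry runs) that uses kubectl wait to block until every deployment in the osm namespace reports the Available condition, and then lists the pods. StatefulSets are not covered; for those, kubectl rollout status statefulset/<name> -n osm can be used.

```shell
# wait_for_osm [TIMEOUT]: wait until all deployments in the osm namespace
# are Available (default timeout 600s), then list the pods.
wait_for_osm() {
  local kubectl=${KUBECTL:-kubectl}
  "$kubectl" wait deployment --all -n osm --for=condition=Available --timeout="${1:-600s}" &&
    "$kubectl" get pods -n osm
}
```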

To check the logs of any container:

kubectl logs -n osm deployments/lcm # for LCM

kubectl logs -n osm deployments/ng-ui # for NG-UI

kubectl logs -n osm deployments/mon # for MON

kubectl logs -n osm deployments/nbi # for NBI

kubectl logs -n osm deployments/pol # for POL

kubectl logs -n osm deployments/ro # for RO

kubectl logs -n osm deployments/keystone # for Keystone

kubectl logs -n osm deployments/grafana # for Grafana

kubectl logs -n osm statefulset/kafka # for Kafka

kubectl logs -n osm statefulset/mongodb-k8s # for MongoDB

kubectl logs -n osm statefulset/mysql # for Mysql

kubectl logs -n osm statefulset/prometheus # for Prometheus

kubectl logs -n osm statefulset/zookeeper # for Zookeeper
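When reporting an issue, it is often convenient to save all of these logs at once. A sketch (hypothetical function name and KUBECTL override) that writes one file per component listed above into a directory; a component that is not present in your installation produces a warning, and its file contains kubectl's error message.

```shell
# collect_osm_logs [DIR]: save the logs of the OSM components above into DIR
# (default: osm-logs-<timestamp>), one <component>.log file each, and print DIR.
collect_osm_logs() {
  local dir=${1:-osm-logs-$(date +%Y%m%d-%H%M%S)} kubectl=${KUBECTL:-kubectl} target
  mkdir -p "$dir" || return
  for target in deployments/{lcm,ng-ui,mon,nbi,pol,ro,keystone,grafana} \
                statefulset/{kafka,mongodb-k8s,mysql,prometheus,zookeeper}; do
    "$kubectl" logs "$target" -n osm > "$dir/${target#*/}.log" 2>&1 ||
      echo "could not get logs for $target" >&2
  done
  echo "$dir"
}
```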

Finally, if you used the option --k8s_monitor to install an add-on to monitor the K8s cluster and OSM, you can check its status as follows:

kubectl get all -n monitoring

The OSM client, a Python-based CLI for OSM, is also available in the host machine. With the OSM client, you can manage the complete lifecycle of descriptors, NSs and VIMs.

osm --help

3.5. Installing standalone OSM Client

The OSM Client is a client library and a command-line tool (based on Python) to operate OSM. It accesses OSM’s Northbound Interface (NBI) and lets you manage descriptors, VIMs, Network Services, Slices, etc. along with their whole lifecycle. In other words, the OSM Client is a sort of Swiss Army knife that provides convenient access to all the functionality that OSM’s NBI offers.

Although the OSM Client is always available in the host machine after installation, it is sometimes convenient to install an OSM Client in another location, different from the OSM host, so that accessing the OSM services does not require OS-level/SSH credentials. Thus, when OSM is already installed on a remote server, you can still operate it from your local computer using the OSM Client.

There are two methods of installing the OSM client: via a snap or via a Debian package.

3.5.1. Snap Installation

On systems that support snaps, you can install the OSM client with the following command:

sudo snap install osmclient --channel 11.0/stable

There are tracks available for all releases. Omitting the channel will use the latest stable release version.

3.5.2. Debian Package Installation

To install the OSM Client on your local Linux machine, follow this procedure:

# Clean the previous repos that might exist

sudo sed -i "/osm-download.etsi.org/d" /etc/apt/sources.list

wget -qO - https://osm-download.etsi.org/repository/osm/debian/ReleaseTHIRTEEN/OSM%20ETSI%20Release%20Key.gpg | sudo apt-key add -

sudo add-apt-repository -y "deb [arch=amd64] https://osm-download.etsi.org/repository/osm/debian/ReleaseTHIRTEEN stable devops IM osmclient"

sudo apt-get update

sudo apt-get install -y python3-pip

sudo -H python3 -m pip install -U pip

sudo -H python3 -m pip install python-magic pyangbind verboselogs

sudo apt-get install python3-osmclient

3.5.3. Usage

Once installed, you can type osm to see a list of commands.

Since the OSM Client is installed on a host different from OSM’s, you will at least need to specify the OSM host, either via an environment variable or via the osm command line. For instance, you can set your client to access an OSM host running at 10.80.80.5 by using:

export OSM_HOSTNAME="10.80.80.5"

For additional options, see osm --help and check our OSM client reference guide here