Day 0: VNF Instantiation & Management setup

Description of this phase

The objective of this section is to provide the guidelines for including all the necessary elements in the VNF Package for its successful instantiation and management setup, so it can be further configured at later stages.

The way to achieve this in OSM is to prepare the descriptor so that it accurately details the VNF requirements, prepare cloud-init scripts (if needed), and identify parameters that may have to be provided at later stages to further adapt to different infrastructures.

Day-0 Onboarding Guidelines

Building the initial package

The most straightforward way to build a VNF package from scratch is to use the existing script available at the OSM Devops repository. From a Linux/Unix-based system:

Install the OSM client if you don’t have it already.

If you have OSM installed, the client is added automatically. If not, there are different methods to install it standalone; see the client installation guide in the OSM documentation.

Run the generator script with the desired options.

    osm package-create [options] [vnf|ns] [name]

The most common options are shown in the command's help:

    --base-directory TEXT       (NS/VNF/NST) Set the location for package creation. Default: "."
    --image TEXT                (VNF) Set the name of the vdu image. Default "image-name"
    --vdus INTEGER              (VNF) Set the number of vdus in a VNF. Default 1
    --vcpu INTEGER              (VNF) Set the number of virtual CPUs in a vdu. Default 1
    --memory INTEGER            (VNF) Set the memory size (MB) of the vdu. Default 1024
    --storage INTEGER           (VNF) Set the disk size (GB) of the vdu. Default 10
    --interfaces INTEGER        (VNF) Set the number of additional interfaces apart from the management interface. Default 0
    --vendor TEXT               (NS/VNF) Set the descriptor vendor. Default "OSM"
    --override                  (NS/VNF/NST) Flag for overriding the package if exists.
    --detailed                  (NS/VNF/NST) Flag for generating descriptor .yaml with all possible commented options
    --netslice-subnets INTEGER  (NST) Number of netslice subnets. Default 1
    --netslice-vlds INTEGER     (NST) Number of netslice vlds. Default 1
    -h, --help                  Show this message and exit.

For example:

    # For the VNF Package
    osm package-create --base-directory /home/ubuntu --image myVNF.qcow2 --vcpu 1 --memory 4096 --storage 50 --interfaces 2 --vendor OSM vnf vLB

    # For the NS Package
    osm package-create --base-directory /home/ubuntu --vendor OSM ns vLB

Note that there are separate options for VNF and NS packages. A Network Service Package that refers to the VNF Packages is always needed in OSM to be able to instantiate the constituent VNFs. The above example will therefore create, in the /home/ubuntu folder:

vLB_vnf → VNFD Folder

vLB_ns → NSD Folder

The VNFD Folder will contain the YAML file which models the VNF. This should be further edited to achieve the desired characteristics.

Modelling advanced topologies

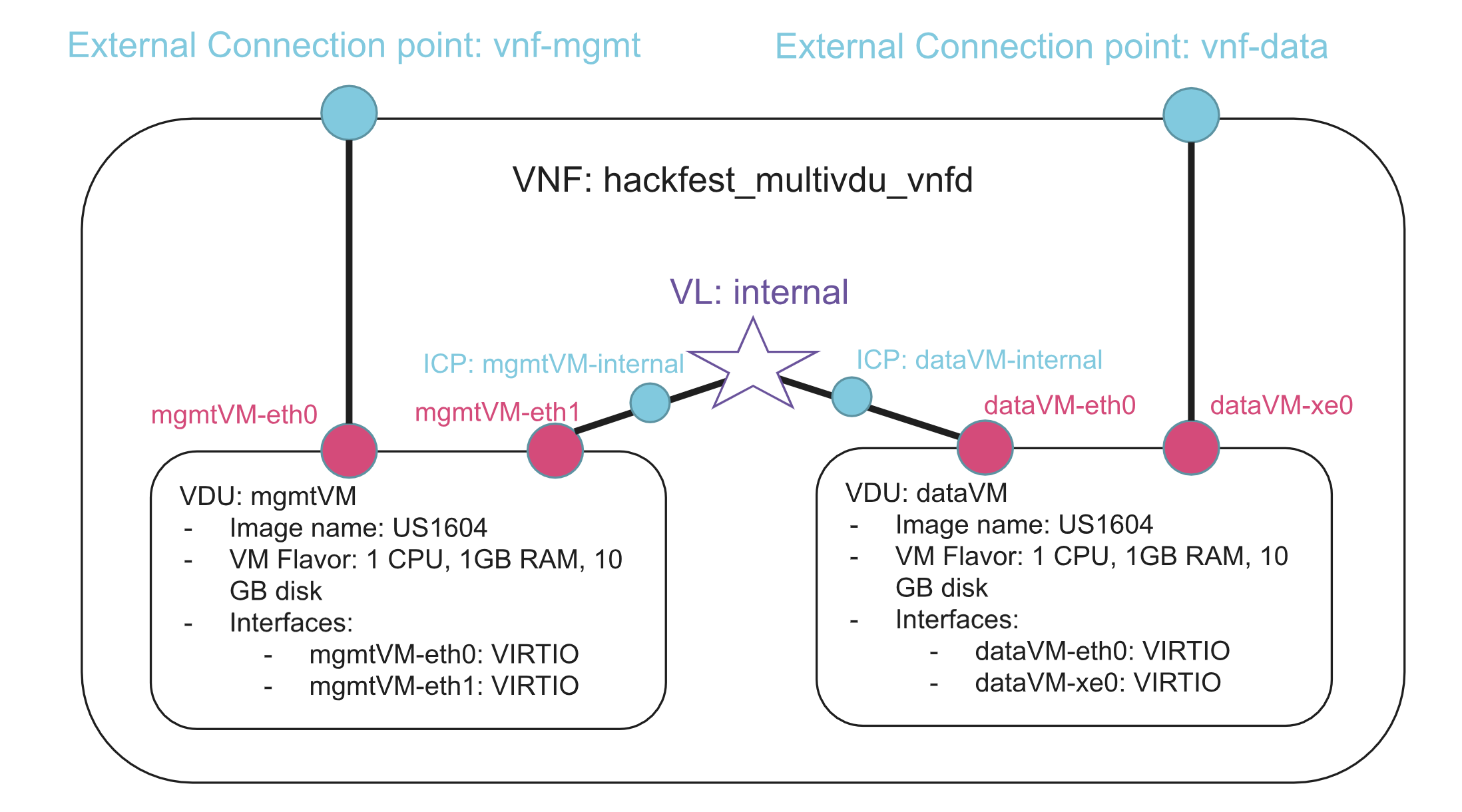

When dealing with multiple VDUs inside a VNF, it is important to understand the differences between external and internal connection points (CPs) and virtual link descriptors (VLDs).

| Component | Definition | Modelled at |
|---|---|---|
| Internal VLD | Network that interconnects VDUs within a VNF | VNFD |
| External VLD | Network that interconnects different VNFs within a NS | NSD |
| Internal CP | Element internal to a VNF, maps VDU interfaces to internal VLDs | VNFD |
| External CP | Element exposed externally by a VNF, maps VDU interfaces to external VLDs | NSD |

As VNF Package builders, we should clearly identify interfaces that i) are internal to the VNF and used to interconnect our own VDUs through internal VLDs, and ii) those we want to expose to other VNFs within a Network Service, using external VLDs.

In this example from the 5th OSM Hackfest, we are building the following Multi-VDU topology:

The VNFD would look like this:

    vnfd:
      description: A VNF consisting of 2 VDUs connected to an internal VL
      # The Deployment Flavour (DF) "ties together" all the other definitions
      df:
      - id: default-df
        instantiation-level:
        - id: default-instantiation-level
          vdu-level:
          - number-of-instances: 1
            vdu-id: mgmtVM
          - number-of-instances: 1
            vdu-id: dataVM
        vdu-profile:
        - id: mgmtVM
          min-number-of-instances: 1
        - id: dataVM
          min-number-of-instances: 1
      # External CPs are exposed externally, to be referenced from the NSD
      ext-cpd:
      - id: vnf-mgmt-ext
        int-cpd:
          cpd: mgmtVM-eth0-int
          vdu-id: mgmtVM
      - id: vnf-data-ext
        int-cpd:
          cpd: dataVM-xe0-int
          vdu-id: dataVM
      id: hackfest_multivdu-vnf
      # Internal VLDs are defined globally at the VNFD
      int-virtual-link-desc:
      - id: internal
      # An external CP should be used for VNF management
      mgmt-cp: vnf-mgmt-ext
      product-name: hackfest_multivdu-vnf
      sw-image-desc:
      - id: US1604
        image: US1604
        name: US1604
      # Inside the VDU block, multiple VDUs and their internal CPs are modelled
      vdu:
      - id: mgmtVM
        # Internal CPs are modelled inside each VDU
        int-cpd:
        - id: mgmtVM-eth0-int
          virtual-network-interface-requirement:
          - name: mgmtVM-eth0
            position: 1
            virtual-interface:
              type: PARAVIRT
        - id: mgmtVM-eth1-int
          int-virtual-link-desc: internal
          virtual-network-interface-requirement:
          - name: mgmtVM-eth1
            position: 2
            virtual-interface:
              type: PARAVIRT
        name: mgmtVM
        sw-image-desc: US1604
        virtual-compute-desc: mgmtVM-compute
        virtual-storage-desc:
        - mgmtVM-storage
      - id: dataVM
        # Internal CPs are modelled inside each VDU
        int-cpd:
        - id: dataVM-eth0-int
          int-virtual-link-desc: internal
          virtual-network-interface-requirement:
          - name: dataVM-eth0
            position: 1
            virtual-interface:
              type: PARAVIRT
        - id: dataVM-xe0-int
          virtual-network-interface-requirement:
          - name: dataVM-xe0
            position: 2
            virtual-interface:
              type: PARAVIRT
        name: dataVM
        sw-image-desc: US1604
        virtual-compute-desc: dataVM-compute
        virtual-storage-desc:
        - dataVM-storage
      version: '1.0'
      virtual-compute-desc:
      - id: mgmtVM-compute
        virtual-memory:
          size: 1.0
        virtual-cpu:
          num-virtual-cpu: 1
      - id: dataVM-compute
        virtual-memory:
          size: 1.0
        virtual-cpu:
          num-virtual-cpu: 1
      virtual-storage-desc:
      - id: mgmtVM-storage
        size-of-storage: 10
      - id: dataVM-storage
        size-of-storage: 10

As an additional reference, let’s take a look at this Network Service Descriptor (NSD), where connections between VNFs are modelled using external CPs mapped to external VLDs like this:

    nsd:
      nsd:
      - description: NS with 2 VNFs connected by datanet and mgmtnet VLs
        id: hackfest_multivdu-ns
        name: hackfest_multivdu-ns
        version: '1.0'
        # External VLDs are defined globally:
        virtual-link-desc:
        - id: mgmtnet
          mgmt-network: true
        - id: datanet
        vnfd-id:
        - hackfest_multivdu-vnf
        df:
        - id: default-df
          # External VLD mappings to CPs are defined inside the deployment flavour's vnf-profile:
          vnf-profile:
          - id: '1'
            virtual-link-connectivity:
            - constituent-cpd-id:
              - constituent-base-element-id: '1'
                constituent-cpd-id: vnf-mgmt-ext
              virtual-link-profile-id: mgmtnet
            - constituent-cpd-id:
              - constituent-base-element-id: '1'
                constituent-cpd-id: vnf-data-ext
              virtual-link-profile-id: datanet
            vnfd-id: hackfest_multivdu-vnf
          - id: '2'
            virtual-link-connectivity:
            - constituent-cpd-id:
              - constituent-base-element-id: '2'
                constituent-cpd-id: vnf-mgmt-ext
              virtual-link-profile-id: mgmtnet
            - constituent-cpd-id:
              - constituent-base-element-id: '2'
                constituent-cpd-id: vnf-data-ext
              virtual-link-profile-id: datanet
            vnfd-id: hackfest_multivdu-vnf

Modelling specific networking requirements

Hard-coding networking values is generally not recommended, because it makes it harder to reuse a single VNF Package across environments. There is, however, some freedom to do so for internal VLDs, especially when they are not reachable by other VNFs and not directly accessible from the management network.

The former IP Profiles feature, today implemented in SOL006 through the Virtual-link Profiles extensions inside the Connection Point blocks, allows us to set some subnet specifics that can become useful. Further settings can be found at the OSM Information Model Documentation. The following VNFD extract can be used as a reference:

    vnfd:
      description: A VNF consisting of 2 VDUs connected to an internal VL
      df:
        ...
        virtual-link-profile:
        - id: internal # internal VLD ID goes here
          virtual-link-protocol-data:
            l3-protocol-data:
              cidr: 192.168.100.0/24
              dhcp-enabled: true

Specific IP and MAC addresses can also be set inside the internal CP block, although this practice is not recommended unless we use it in isolated connection points.

TODO: Example of setting IP & MAC Addresses with new SOL006 model

Building and adding cloud-init scripts

Cloud-init basics

Cloud-init is normally used for Day-0 operations like:

Setting a default locale

Setting an instance hostname

Generating instance SSH private keys or defining passwords

Adding SSH keys to a user’s .ssh/authorized_keys so they can log in

Setting up ephemeral mount points

Configuring network devices

Adding users and groups

Adding files
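As a rough illustration of several of the operations listed above, a #cloud-config file might look like the following. This is only a sketch: the locale, the `osmadmin` user, the truncated public key and the file path are placeholders that do not come from this guide, and the modules actually available depend on the cloud-init version shipped in the image.

```yaml
#cloud-config
# Illustrative values only; adapt every name and path to your VNF image.
locale: en_US.UTF-8              # set a default locale
hostname: lb_vdu                 # set the instance hostname
users:                           # add users and groups
  - default                      # keep the image's default user
  - name: osmadmin               # placeholder user name
    groups: [sudo]
    shell: /bin/bash
    ssh_authorized_keys:         # keys added to ~/.ssh/authorized_keys
      - ssh-rsa AAAA...placeholder osmadmin@example
write_files:                     # add files
  - path: /etc/motd
    content: |
      Provisioned by cloud-init at Day-0
```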

Cloud-init scripts are referenced at the VDU level. They can be defined inline, or included in the cloud_init folder of the VNF package and then referenced by file name in the descriptor.

For inline cloud-init definition, follow this:

    vnfd:
      ...
      vdu:
      - ...
        cloud-init: |
          #cloud-config
          ...

For external cloud-init definition, proceed like this:

    vnfd:
      ...
      vdu:
      - ...
        cloud-init: cloud_init_filename

Its content can have a number of formats, including #cloud-config and bash. For example, either of the following scripts sets a password in Linux.

    #cloud-config
    hostname: lb_vdu
    password: osm2018
    chpasswd: { expire: False }
    ssh_pwauth: True

    #cloud-config
    hostname: lb_vdu
    chpasswd:
      list: |
        ubuntu:osm2018
      expire: False

Additional information about cloud-init can be found in this documentation.

Parametrizing Cloud-init files

Beginning in OSM version 5.0.3, cloud-init files can be parametrized by using double curly brackets. For example:

    #cloud-config
    hostname: lb_vdu
    password: {{ password }}
    chpasswd: { expire: False }
    ssh_pwauth: True

Such variables can then be passed at instantiation time by referring to the index of the VNF they apply to, together with the name and value of each variable.

    osm ns-create ... --config "{additionalParamsForVnf: [{member-vnf-index: '1', additionalParams:{password: 'secret'}}]}"

When dealing with multiple variables, it might be useful to pass a YAML file instead.

    osm ns-create ... --config-file vars.yaml
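As a minimal sketch, the vars.yaml file could carry the same structure as the inline `--config` string, written in block style. The index and the value below simply mirror the inline example and should be replaced with your own.

```yaml
# vars.yaml: same content as the inline --config string, one key per line
additionalParamsForVnf:
  - member-vnf-index: '1'        # VNF the parameters apply to
    additionalParams:
      password: 'secret'         # fills {{ password }} in the cloud-init file
```

Further variables for the same VNF would be added as extra keys under `additionalParams`.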

Please note that variable naming convention follows Jinja2 (Python identifiers), so hyphens are not allowed.
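To illustrate the naming rule, the template below uses a hypothetical variable called `admin_password` (the name is an assumption for this example, not something defined by OSM). It would be supplied at instantiation time as `additionalParams: {admin_password: ...}`.

```yaml
#cloud-config
hostname: lb_vdu
# Valid: underscores are allowed in Jinja2 variable names.
password: {{ admin_password }}
# A hyphenated name such as admin-password would not be accepted as a variable.
chpasswd: { expire: False }
ssh_pwauth: True
```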

Support for Configuration Drive

Besides cloud-init being provided as userdata through a metadata service, some VNFs require the metadata to be stored locally on a configuration drive.

Support for this is available in the VNFD model, as follows:

    vnfd:
      ...
      vdu:
      - ...
        supplemental-boot-data:
          boot-data-drive: 'true'

Guidelines for EPA requirements

Most EPA features can be specified at the VDU descriptor level as requirements in the virtual-compute, virtual-cpu and virtual-memory descriptors, which are then translated into the appropriate request by the VIM connector. Please note that the NFVI should be pre-configured to support these EPA capabilities.

Huge Pages

Huge pages are requested as follows:

    vnfd:
      ...
      vdu:
      - ...
        virtual-memory:
          mempage-size: LARGE
          ...

The mempage-size attribute can take any of these values:

LARGE: Requires hugepages (either 2MB or 1GB)

SMALL: Does not require hugepages

SIZE_2MB: Requires 2MB hugepages

SIZE_1GB: Requires 1GB hugepages

PREFER_LARGE: Application prefers hugepages

CPU Pinning

CPU pinning allows for different settings related to vCPU assignment and hyper threading:

    vnfd:
      ...
      vdu:
      - ...
        virtual-cpu:
          policy: DEDICATED
          thread-policy: AVOID
          ...

The CPU pinning policy describes the association between the virtual CPUs in the guest and the physical CPUs in the host. Valid values are:

DEDICATED: Virtual CPUs are pinned to physical CPUs.

SHARED: Multiple VMs may share the same physical CPUs.

ANY: Any policy is acceptable for the VM.

The CPU thread pinning policy describes how to place the guest CPUs when the host supports hyper-threading. Valid values are:

AVOID: Avoids placing a guest on a host with threads.

SEPARATE: Places vCPUs on separate cores, and avoids placing two vCPUs on two threads of the same core.

ISOLATE: Places each vCPU on a different core, and places no vCPUs from a different guest on the same core.

PREFER: Attempts to place vCPUs on threads of the same core.

NUMA Topology Awareness

This policy defines if the guest should be run on a host with one NUMA node or multiple NUMA nodes.

    vnfd:
      ...
      vdu:
      - ...
        virtual-memory:
          numa-node-policy:
            node-cnt: 2
            mem-policy: STRICT
            node:
            - id: 0
              memory-mb: 2048
              num-cores: 1
            - id: 1
              memory-mb: 2048
              num-cores: 1
          ...

node-cnt defines the number of NUMA nodes to expose to the VM, while mem-policy defines if the memory should be allocated strictly from the ‘local’ NUMA node (STRICT) or not necessarily from that node (PREFERRED).

The rest of the settings request a specific mapping between the NUMA nodes and the VM resources, and can be explored in detail in the OSM Information Model Documentation.

SR-IOV and PCI-Passthrough

Dedicated interface resources can be requested at the VDU interface level.

    vnfd:
      ...
      vdu:
      - ...
        int-cpd:
        - ...
          virtual-network-interface-requirement:
          - name: eth0
            virtual-interface:
              type: SR-IOV

Valid values for type, which specifies the type of virtual interface between VM and host, are:

PARAVIRT : Use the default paravirtualized interface for the VIM (virtio, vmxnet3, etc.).

PCI-PASSTHROUGH : Use PCI-PASSTHROUGH interface.

SR-IOV : Use SR-IOV interface.

E1000 : Emulate E1000 interface.

RTL8139 : Emulate RTL8139 interface.

PCNET : Emulate PCNET interface.
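Interface types can differ between the connection points of the same VDU. The fragment below is a sketch of one plausible arrangement, in which management traffic uses the default paravirtualized interface and the data plane uses SR-IOV. The CP ids and interface names are hypothetical, and the layout follows the int-cpd structure used in the examples above.

```yaml
vnfd:
  ...
  vdu:
  - ...
    int-cpd:
    - id: mgmt-int                     # hypothetical CP id
      virtual-network-interface-requirement:
      - name: eth0
        virtual-interface:
          type: PARAVIRT               # management over the default virtual NIC
    - id: data-int                     # hypothetical CP id
      virtual-network-interface-requirement:
      - name: xe0
        virtual-interface:
          type: SR-IOV                 # data plane over a dedicated interface
```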

Managing alternative images for specific VIM types

The image name specified at the VDU level is expected to be located either in the images folder within the VNF package, or in the VIM catalogue.

Alternative images can be specified and mapped to different VIM types, so that they are used whenever the VNF package is instantiated over the given VIM type.

In the following example, the ubuntu20 image is used by default (any VIM), but a different image is used if the VIM type is AWS.

    vnfd:
      ...
      vdu:
      - ...
      sw-image-desc:
      - id: ubuntu20
        image: ubuntu20
        name: ubuntu20
      - id: ubuntuAWS
        image: ubuntu/images/hvm-ssd/ubuntu-artful-17.10-amd64-server-20180509
        name: ubuntuAWS
        vim-type: aws

Updating and Testing Instantiation of the VNF Package

Once the VNF Descriptor has been updated with all the Day-0 requirements, its folder needs to be repackaged. This can be done with a single OSM CLI command that packages, validates and uploads the package to the catalogue.

    osm vnfpkg-create [VNF Folder]

A Network Service package containing at least this single VNF needs to be used to instantiate the VNF. This could be generated with the OSM CLI command described earlier.

Remember the objectives of this phase:

Instantiating the VNF with all the required VDUs, images, initial (unconfigured) state and NFVI requirements.

Making the VNF manageable from OSM (OSM should have SSH access to the management interfaces, for example)

To test this out, the NS can be launched using the OSM client, like this:

    osm ns-create --ns_name [ns name] --nsd_name [nsd name] --vim_account [vim name] \
      --ssh_keys [comma separated list of public key files to inject to vnfs]

At launch time, extra instantiation parameters can be passed so that the VNF can be adapted to the particular instantiation environment, or so that it inter-operates properly with other VNFs within the specific NS. These parameters are covered in more detail in the next chapter, as part of the Day-1 objectives.

The following sections will provide details on how to further populate the VNF Package to automate Day 1/2 operations.